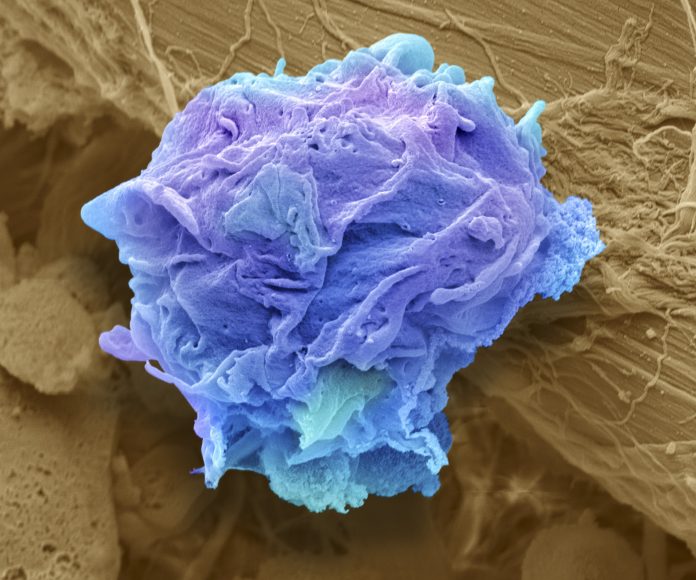

One of the largest studies to date shows that AI-assisted image analysis can detect lymphoma, a cancer of the lymphatic system, in 90% of cases. The Scandinavian study used LARS (Lymphoma Artificial Reader System), a deep learning model.

Lymphoma is one of the most common cancers in the United States, accounting for about 4% of all cancers. All subjects in this study were current or former lymphoma patients whose diagnosis had been confirmed histologically. “We used former patients with negative follow-up scans as our negative cases, i.e. former lymphoma patients,” lead author Ida Häggström, of Chalmers University of Technology, told Inside Precision Medicine.

Häggström developed the computer model in close collaboration with Sahlgrenska Academy at the University of Gothenburg and Sahlgrenska University Hospital. Their paper appeared in The Lancet Digital Health last month.

LARS takes an image from positron emission tomography (PET) as input and analyzes it with the AI model. The model has been trained to find patterns and features in the image that let it predict, as accurately as possible, whether the image is positive or negative, that is, whether or not it contains lymphoma.
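The article does not describe how LARS turns its analysis into a final positive or negative call. A common pattern for binary image classifiers is for the network to emit a single raw score, which is squashed into a probability and compared against a cutoff. The minimal sketch below assumes exactly that; the functions `sigmoid` and `classify_scan` and the 0.5 threshold are hypothetical illustrations, not LARS's actual code.

```python
# Hypothetical sketch of the final decision step of a binary image classifier.
# Assumption: the network outputs one raw score (a logit) per PET scan.
# This is NOT taken from the LARS code base.
import math


def sigmoid(logit: float) -> float:
    """Map a raw model score to a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-logit))


def classify_scan(logit: float, threshold: float = 0.5) -> str:
    """Label a scan 'positive' (lymphoma) or 'negative'.

    `threshold` is a hypothetical operating point: raising it makes the
    model flag fewer scans, trading missed cancers for fewer false alarms.
    """
    return "positive" if sigmoid(logit) >= threshold else "negative"


print(classify_scan(2.3))   # probability about 0.91, prints "positive"
print(classify_scan(-1.2))  # probability about 0.23, prints "negative"
```

Where the cutoff sits is a clinical choice as much as a technical one, since it sets the balance between false positives and false negatives.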

“Based on more than 17,000 images from more than 5,000 lymphoma patients, we have created a learning system in which computers have been trained to find visual signs of cancer in the lymphatic system,” said Häggström.

In this study, the researchers examined image archives going back more than ten years and compared the patients’ final diagnoses with PET and computed tomography (CT) scans taken before and after treatment. These images were then used to train the AI model to recognize signs of lymphoma.

“It’s about the fact that we haven’t programmed predetermined instructions in the model about what information in the image it should look at, but let it teach itself which image patterns are important in order to get the best predictions possible,” said Häggström.

She added that one challenge was assembling such a large volume of images. Another was adapting the computer model so that it could distinguish cancer from the temporary, treatment-related changes that can appear in images after radiotherapy and chemotherapy.

“In the study, we estimated the accuracy of the computer model to be about ninety per cent, and especially in the case of images that are difficult to interpret, it could support radiologists in their assessments,” she said.

“We found that many of the false positives had patterns of infection/inflammation, treatment related uptake, or other non-malignant uptake such as spills. We could not find a reasonable explanation for the misclassification in only a few cases (29). The analysis is harder for false negatives. We did find some human annotation errors (13), but mostly unknown reasons,” Häggström told Inside Precision Medicine.
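Häggström’s figures refer to accuracy and to false positives and false negatives. The standard definitions below show how these quantities relate, and why a model that is about 90% accurate overall need not catch exactly 90% of cancers. This is a minimal sketch: the class name `ConfusionCounts` is hypothetical, and the counts in the example are invented for illustration, not the study’s results.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    tp: int  # scans with lymphoma that the model flagged (true positives)
    fp: int  # lymphoma-free scans flagged anyway, e.g. inflammation (false positives)
    tn: int  # lymphoma-free scans correctly cleared (true negatives)
    fn: int  # scans with lymphoma that the model missed (false negatives)

    def accuracy(self) -> float:
        # Share of all scans labelled correctly.
        return (self.tp + self.tn) / (self.tp + self.fp + self.tn + self.fn)

    def sensitivity(self) -> float:
        # Share of scans with lymphoma that were detected.
        return self.tp / (self.tp + self.fn)

    def specificity(self) -> float:
        # Share of lymphoma-free scans correctly labelled negative.
        return self.tn / (self.tn + self.fp)


# Invented counts for illustration only; these are NOT the study's numbers.
example = ConfusionCounts(tp=44, fp=4, tn=46, fn=6)
print(f"accuracy={example.accuracy():.2f}")        # accuracy=0.90
print(f"sensitivity={example.sensitivity():.2f}")  # sensitivity=0.88
print(f"specificity={example.specificity():.2f}")  # specificity=0.92
```

In this made-up example the overall accuracy is 90%, yet the model detects only 88% of the scans that contain lymphoma, because errors on the two classes are weighted together in the accuracy figure.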

“We have made the computer code available now so that other researchers can continue to work on the basis of our computer model, but the clinical tests that need to be done are extensive,” said Häggström.

She also pointed to other advantages of an AI-based system.

“An AI-based computer system for interpreting medical images also contributes to increased equality in healthcare by giving patients access to the same expertise and being able to have their images reviewed within a reasonable time, regardless of which hospital they are in. Since an AI system has access to much more information, it also makes it easier in rare diseases where radiologists rarely see images,” she said.